Today, we watch TV shows and movies on streaming services more than ever before. Because audiences enjoy quality content from all over the world, demand for localization is growing. Have you watched Squid Game on Netflix? What about SEE from Apple TV+? If you have been enjoying foreign content lately, you may have wondered how its translated subtitles are created. Let’s explore the process and see how XL8’s technology helps.

The first step is to transcribe the media in its original language. For example, if a program’s original language is English, we create subtitles in English. People might initially think of these as closed captions, but subtitles are a little different: closed captions generally include descriptions of non-speech audio for viewers who are deaf or hard of hearing, while subtitles don’t. This type of subtitle is called a “template subtitle,” meaning the dialog alone, with no additional descriptors. Closed captions are usually created afterward by adding information such as [sound effects] to the template subtitle.
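The relationship between a template subtitle and a closed caption can be sketched as two renderings of the same cue. This is a minimal illustration, not XL8’s data model: the `Cue` class and its `descriptors` field are hypothetical, and placing bracketed descriptors before the dialog is an assumption, since placement conventions vary by style guide.

```python
from dataclasses import dataclass, field


@dataclass
class Cue:
    """One subtitle event: spoken dialog plus optional non-speech descriptors."""
    dialog: str
    descriptors: list[str] = field(default_factory=list)  # hypothetical, e.g. sound effects


def render_template(cue: Cue) -> str:
    """Template subtitle: the dialog only, with no additional descriptors."""
    return cue.dialog


def render_closed_caption(cue: Cue) -> str:
    """Closed caption: the template text with bracketed descriptors added in front."""
    if not cue.descriptors:
        return cue.dialog
    tags = " ".join(f"[{d}]" for d in cue.descriptors)
    return f"{tags} {cue.dialog}"


cue = Cue("Who’s there?", ["door creaks"])
print(render_template(cue))        # Who’s there?
print(render_closed_caption(cue))  # [door creaks] Who’s there?
```

Because the template is the shared base, the caption track can be derived from it later without retranscribing the dialog.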

In the traditional localization process, people watch or listen to the media, transcribe the dialog, and add time codes for each sentence. Generally, two teams work together: the first transcribes speech into text, and the second matches the text to time codes. This is time-consuming because there is a lot to consider when creating subtitles. The two most important factors are Characters Per Line (CPL) and sentence line breaks, that is, where to split a sentence when it is longer than the CPL.
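To make “adding time codes” concrete, here is a small sketch that writes timed cues in the widely used SubRip (SRT) format, where each cue has an index, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` time range, and its text. The choice of SRT and the `(start, end, text)` tuple shape are assumptions for illustration; the article does not say which formats XL8 uses.

```python
def format_srt_time(seconds: float) -> str:
    """Convert seconds to an SRT time code such as 01:02:03,500."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: list[tuple[float, float, str]]) -> str:
    """Render (start_seconds, end_seconds, text) cues as an SRT document."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{format_srt_time(start)} --> {format_srt_time(end)}\n{text}\n"
        )
    return "\n".join(blocks)  # a blank line separates consecutive cues


print(to_srt([(1.0, 2.5, "Hello."), (3.0, 4.0, "Bye.")]))
```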

Studies have found that too many characters per line cause excessive eye movement, which makes viewers uncomfortable. If a line break falls in an awkward position, the context may not be conveyed well. These two factors are the most time-consuming parts of subtitle creation. In English subtitles, the CPL is generally set to 42, with a maximum of two lines visible, and there are many rules governing line breaks. Creating a template subtitle therefore requires not only transcribing text and correcting time codes, but also applying these rules properly.
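The CPL and line-break rules above can be approximated with a simple scoring search: try every space as a break point, discard breaks that leave a line longer than 42 characters, and prefer balanced lines that break after punctuation or before a conjunction. This is a hand-written heuristic sketch, not the AI-based approach XL8 describes; the word list and the penalty weights are hypothetical values chosen for illustration.

```python
# Punctuation that makes a natural end for the top line.
PREFERRED_AFTER = (",", ".", ";", ":", "?", "!")
# Illustrative list: style guides often advise breaking before conjunctions
# and prepositions rather than after them.
BREAK_BEFORE = {"and", "but", "or", "so", "because", "which", "that",
                "to", "of", "in", "on", "with"}


def break_lines(text: str, cpl: int = 42) -> list[str]:
    """Split text into at most two lines of at most `cpl` characters each."""
    text = " ".join(text.split())  # collapse runs of whitespace
    if len(text) <= cpl:
        return [text]
    best_cost, best_lines = None, None
    for i, ch in enumerate(text):
        if ch != " ":
            continue
        top, bottom = text[:i], text[i + 1:]
        if len(top) > cpl or len(bottom) > cpl:
            continue  # this break would violate the CPL limit
        cost = abs(len(top) - len(bottom))  # prefer balanced lines
        if top.endswith(PREFERRED_AFTER):
            cost -= 20  # hypothetical reward for breaking after punctuation
        if bottom.split()[0].lower() in BREAK_BEFORE:
            cost -= 10  # hypothetical reward for starting line two with a conjunction
        if best_cost is None or cost < best_cost:
            best_cost, best_lines = cost, [top, bottom]
    if best_lines is None:
        raise ValueError("text does not fit in two lines; split it into more subtitles")
    return best_lines


print(break_lines("I told you we should have left early, but you never listen to me."))
# ['I told you we should have left early,', 'but you never listen to me.']
```

A purely balanced split would break after “left”, separating “left early,” across lines; the punctuation and conjunction rewards move the break to the clause boundary instead.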

This is where AI technologies come into play. For example, XL8’s Skroll Sync uses Speech-To-Text (STT) technology to transcribe media into text and synchronize time codes. But Skroll Sync does more than simple STT: it uses AI models to follow the CPL rule and find the best line breaks. After getting time-coded speech results from STT, Skroll Sync passes them to post-processing AI models, which generate a near-production-ready subtitle, so users don’t need to spend time post-editing CPL and line breaks.

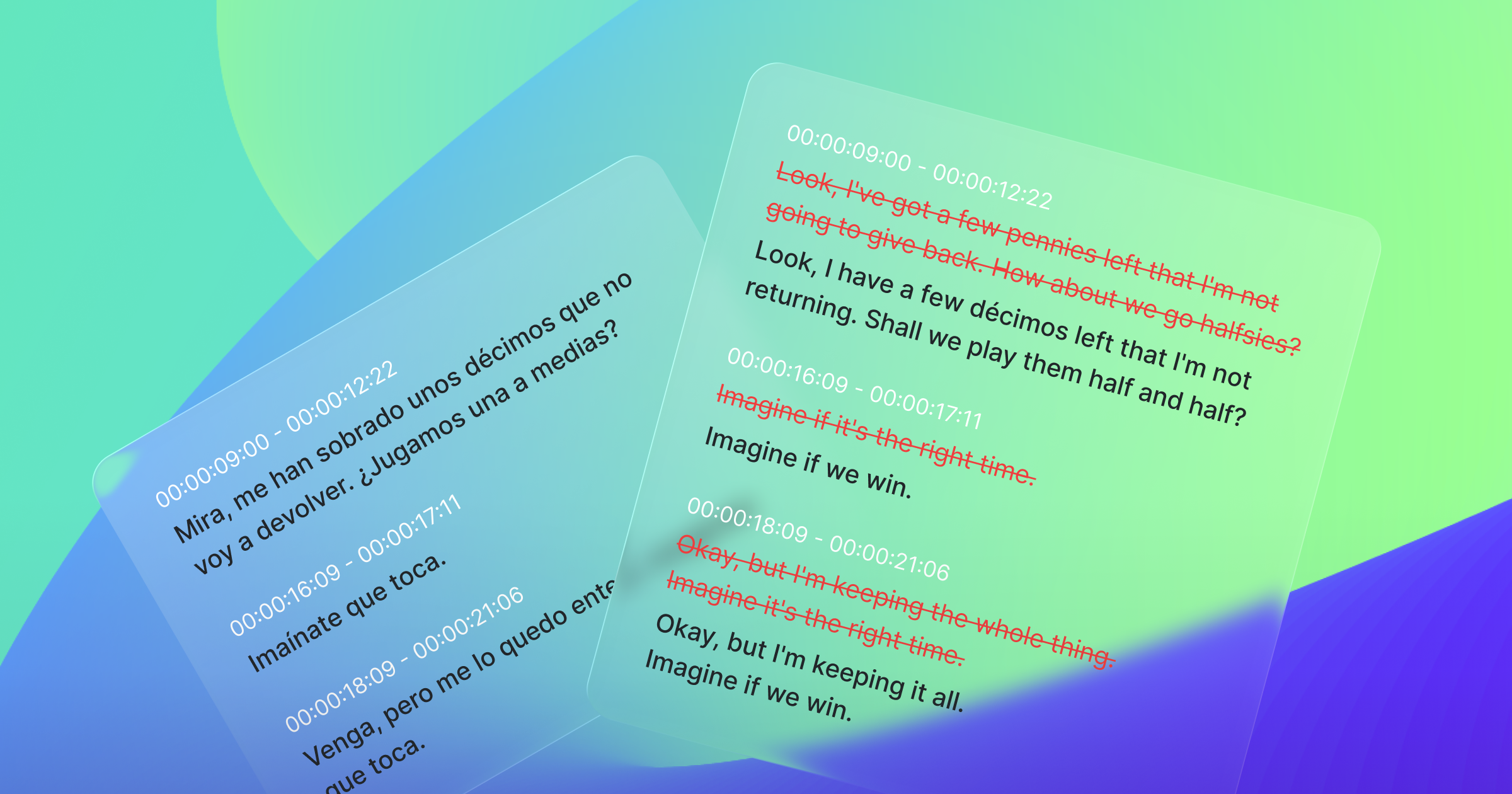
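The hand-off from STT to subtitle post-processing can be sketched as grouping word-level timestamps into cues. STT output formats vary, so the `Word` shape here is an assumption, and the greedy grouping with its thresholds (84 characters for two 42-character lines, a 0.7 s pause, a 7 s maximum duration) is a hypothetical stand-in, not Skroll Sync’s models. A line-breaking step like the one discussed earlier would then run on each cue’s text.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Cue:
    start: float
    end: float
    text: str


def _to_cue(words: list[Word]) -> Cue:
    return Cue(words[0].start, words[-1].end, " ".join(w.text for w in words))


def group_words(words: list[Word], max_chars: int = 84, max_gap: float = 0.7,
                max_duration: float = 7.0) -> list[Cue]:
    """Greedily group timed STT words into subtitle cues (illustrative thresholds)."""
    cues: list[Cue] = []
    current: list[Word] = []
    for word in words:
        if current:
            candidate = " ".join(w.text for w in current + [word])
            too_long = len(candidate) > max_chars
            too_slow = word.end - current[0].start > max_duration
            paused = word.start - current[-1].end > max_gap
            sentence_end = current[-1].text.endswith((".", "?", "!"))
            if too_long or too_slow or paused or sentence_end:
                cues.append(_to_cue(current))  # close the current cue
                current = []
        current.append(word)
    if current:
        cues.append(_to_cue(current))
    return cues


words = [Word("Hello", 0.0, 0.4), Word("there.", 0.45, 0.9),
         Word("How", 2.0, 2.2), Word("are", 2.25, 2.4), Word("you?", 2.45, 2.8)]
for cue in group_words(words):
    print(cue)
```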

After generating template subtitles, the next step is to translate them into several languages, a task called transcreation. Transcreation is a blend of two words, translation and creation: it is an intricate form of translation that preserves the original intent, context, emotion, and tone. We may also need to apply the correct units for the target language’s country (e.g., converting miles to kilometers).

This is where XL8’s Skroll Translation comes in. Skroll Translation takes subtitle files, reads an entire subtitle file as one document, and tries to preserve context across sentences. It also converts measurements into the units used in the target language’s country. If you translate an English subtitle into Korean, for example, you can see that miles are converted into kilometers. This feature reduces post-editing time.
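As a rough illustration of unit localization, the sketch below rewrites “N miles” as the equivalent distance in kilometers using the exact definition 1 mile = 1.609344 km, rounded to one decimal place for readability. It works on English text purely for clarity and is a rule-based stand-in, not how Skroll Translation performs the conversion; a real pipeline would emit the unit in the target language.

```python
import re

MILES_TO_KM = 1.609344  # exact international definition

# Matches e.g. "5 miles", "2.5 miles", "1 mile" (English source text assumed).
_MILES = re.compile(r"\b(\d+(?:\.\d+)?)\s+miles?\b")


def convert_miles(text: str) -> str:
    """Replace mile distances in text with kilometers, rounded to 0.1 km."""
    def repl(match: re.Match) -> str:
        km = float(match.group(1)) * MILES_TO_KM
        value = f"{km:.1f}".rstrip("0").rstrip(".")  # "8.0" -> "8"
        unit = "kilometer" if value == "1" else "kilometers"
        return f"{value} {unit}"
    return _MILES.sub(repl, text)


print(convert_miles("It’s 5 miles from here."))  # It’s 8 kilometers from here.
print(convert_miles("Only 3 miles left."))       # Only 4.8 kilometers left.
```

Rounding matters in subtitles: “8.04672 kilometers” would be exact but unreadable at reading speed and would waste characters against the CPL limit.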

Skroll Translation handles more than context and unit conversion. A translated subtitle must still respect the CPL, which means translators have to keep to the characters-per-line limit even in the translated text. Furthermore, each language has its own rules for multi-speaker dialogue, ellipses, and more. If you have examined Netflix’s timed text guidelines, you may be shocked by the number of language-specific rules. Skroll Translation is designed for media, and it supports all of these rules.
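Per-language rules lend themselves to a small configuration table plus checks. In the sketch below, the `LanguageRules` class and `RULES` table are hypothetical, and the values (for example a 16-character CPL for Korean, or a hyphen as the dialogue marker) are illustrative only; confirm real limits against each language’s style guide before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LanguageRules:
    cpl: int            # maximum characters per line
    max_lines: int      # maximum visible lines per subtitle
    dialogue_dash: str  # prefix marking each speaker in a two-speaker subtitle


# Illustrative values only; check each language's actual style guide.
RULES = {
    "en": LanguageRules(cpl=42, max_lines=2, dialogue_dash="-"),
    "ko": LanguageRules(cpl=16, max_lines=2, dialogue_dash="-"),
}


def format_dialogue(lang: str, first: str, second: str) -> list[str]:
    """Put two speakers in one subtitle, one speaker per line."""
    dash = RULES[lang].dialogue_dash
    return [f"{dash}{first}", f"{dash}{second}"]


def violations(lang: str, lines: list[str]) -> list[str]:
    """Return human-readable rule violations for a (translated) subtitle."""
    rules = RULES[lang]
    problems = []
    if len(lines) > rules.max_lines:
        problems.append(f"{len(lines)} lines exceeds limit of {rules.max_lines}")
    for n, line in enumerate(lines, start=1):
        if len(line) > rules.cpl:  # counts Unicode code points
            problems.append(f"line {n}: {len(line)} chars exceeds CPL {rules.cpl}")
    return problems


subtitle = format_dialogue("en", "Are you coming?", "Not tonight.")
print(subtitle, violations("en", subtitle))
```

Keeping the limits in data rather than code means a new language only needs a new table entry.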

Using AI for these initial steps significantly reduces the workload. We call this the MTPE process: Machine Translation and Post-Editing. Here is the workflow diagram using our MediaCAT platform:

By letting AI handle general translation, unit conversion, and CPL compliance, translators can focus more on adaptation and creativity instead of spending their time on mundane tasks. We believe this is the future of transcreation: giving translators more time to focus on their art, not on processes that AI can perform.

Finally, the polished result is delivered to your screen. Now you’ve explored the entire subtitle creation process. Subtitles are not just transcriptions or translations; a great deal of effort goes into making sure you can enjoy a show in the language of your choice.

Written by Jay Jinhyung Park, CTO & co-founder at XL8 Inc.