Media Frame Rates

Have you ever made a flipbook animation on the corner of your notepad? It does not take many pages to trick your mind into thinking your doodle is moving. Movies and television shows operate on the same principle of apparent motion. The only difference is that they flip through a larger number of still images at a much faster rate.

In filmmaking, a frame refers to a single still image in a video, and the frame rate, commonly expressed in frames per second (fps), is the number of frames displayed each second. Whether or not you are familiar with media production, you have likely encountered phrases like “24 fps” while gaming, recording videos on your phone, or buying a new television. This article briefly covers the history of frame rates and explains their significance in media localization.

History of Frame Rates

Some of the most common frame rates are 24, 25, 30, and 60 fps, but less intuitive frame rates like 23.976 fps and 29.97 fps are also widely used. How did these specific frame rates come into existence, and why are there so many different frame rates?

Back in the early silent film era, there were no standardized frame rates. Most films were produced at frame rates ranging from 16 to 24 fps. Since cameras and projectors at the time were cranked by hand, the frame rate was determined by how fast they were cranked. Films had recommended projection speeds, such as “90 feet per minute”, to which projectionists were expected to adhere. Unfortunately, theaters often showed films at a faster speed so they could fit in an extra showing.

You may have come across old black-and-white footage that appears to be sped up. Some filmmakers, like Charlie Chaplin, appear to have deliberately used faster projection speeds for comedic effect. The following YouTube video illustrates the difference between viewing a Chaplin film at real-time speed and at an accelerated speed. However, much of the old footage we encounter today may simply have been converted incorrectly, at a higher frame rate than it was shot at.

The introduction of sound in films is what forced the standardization of frame rates. Without sound, it was acceptable to project a 16-fps film at 24 fps, and the 50% speed increase even added a certain charm to the film. Changes in audio speed, however, are much more perceptible to the audience: even a 10% increase produces a noticeable change in pitch, and the picture and sound must also stay in sync. Several companies set out to develop a system for “talkies”, and the first to succeed was Warner Brothers’ Vitaphone. It was used in the 1927 film “The Jazz Singer”, the first feature film with synchronized dialogue. The frame rate Vitaphone happened to use was 24 fps, so 24 fps became the standard for filmmaking, and it remains the standard today. It was not all luck, though: 24 is divisible by 2, 3, 4, 6, 8, and 12, which makes it practical for editing.

Then came television. In 1941, the National Television System Committee (NTSC), established by the US FCC, issued the black-and-white television technical standard for the United States. The NTSC standard uses 30 fps instead of 24 fps. Alternating current in the US runs at 60 Hz, and the picture was sent interlaced, alternating between fields of even-numbered lines and odd-numbered lines, so 60 fields per second, or 30 frames per second, was a natural choice. A mismatch between the AC power frequency and the refresh rate could produce rolling bars on the screen due to intermodulation, so tying the field rate to the power frequency avoided that problem.

So far everything sounds reasonable, but then came color television. Black-and-white televisions were already common in homes, so the new color broadcasts had to remain compatible with existing black-and-white receivers. This was achieved by adding a color subcarrier to the existing black-and-white signal, but the color subcarrier interfered with the audio carrier, producing a dot pattern on the screen. The solution was simply to slow the frame rate down by 0.1%, a factor of 1,000/1,001. This is how we came to use 29.97 fps, or 30,000/1,001 fps to be precise. Meanwhile, in Europe, two other color television standards, PAL and SECAM, were introduced. Europe uses 50 Hz AC power, so, following the same logic, PAL and SECAM use 25 fps.
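Because the 0.1% slowdown is exactly the factor 1,000/1,001, the NTSC-family rates can be written as exact fractions. The short Python sketch below (the function name `ntsc_rate` is our own, hypothetical choice) uses the standard `fractions` module to show how the familiar decimal values fall out of that factor. Note that “29.97” is itself a rounded value.

```python
from fractions import Fraction

# NTSC color slowed nominal rates by a factor of 1000/1001 (about 0.1%).
NTSC_FACTOR = Fraction(1000, 1001)

def ntsc_rate(nominal_fps: int) -> Fraction:
    """Return the exact NTSC-style rate for a nominal integer frame rate."""
    return nominal_fps * NTSC_FACTOR

for nominal in (24, 30, 60):
    exact = ntsc_rate(nominal)
    print(f"{nominal} fps -> {exact} = {float(exact):.6f} fps")
# 24 fps -> 24000/1001 = 23.976024 fps
# 30 fps -> 30000/1001 = 29.970030 fps
# 60 fps -> 60000/1001 = 59.940060 fps
```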


Because of AC power frequencies, television broadcasting ended up adopting different frame rates from film, while most film equipment used 24 fps. To broadcast television shows and films produced at 24 fps, they first had to be converted to the 29.97 fps broadcast standard. The conversion involves slowing the footage down by 0.1% and applying a process called 3:2 pulldown, which spreads every four film frames across five video frames. If you are producing something for television broadcast, it makes sense to shoot at the 0.1% slower speed from the outset, which led to the popularity of 23.976 fps (exactly 24,000/1,001 fps). Some round it up to 23.98 fps, but this rounding error accumulates over a long video: after one hour it amounts to roughly 14 frames, or about 0.6 seconds.
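To make the “four frames into five” idea concrete, here is a minimal Python sketch of 3:2 pulldown, assuming interlaced video with two fields per frame. Film frames alternately contribute three and two fields, and consecutive fields are then paired into video frames. The function name `pulldown_32` is hypothetical, and the sketch ignores field order (top or bottom first) and the separate 0.1% slowdown; many references describe the same cadence as 2:3, which simply starts the pattern with two fields.

```python
def pulldown_32(film_frames: list[str]) -> list[tuple[str, str]]:
    """Sketch of 3:2 pulldown: every 4 film frames become 10 fields, i.e. 5 video frames."""
    if len(film_frames) % 4 != 0:
        raise ValueError("expects a multiple of 4 film frames")
    fields: list[str] = []
    for i, frame in enumerate(film_frames):
        # Alternate 3 fields, 2 fields, 3 fields, 2 fields, ...
        fields.extend([frame] * (3 if i % 2 == 0 else 2))
    # Pair consecutive fields into interlaced video frames.
    return [(fields[j], fields[j + 1]) for j in range(0, len(fields), 2)]

print(pulldown_32(["A", "B", "C", "D"]))
# [('A', 'A'), ('A', 'B'), ('B', 'C'), ('C', 'C'), ('D', 'D')]
```

The mixed frames (A/B and B/C) are what give pulled-down footage its characteristic judder on motion.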

In countries that adopted 25 fps standards, 24 fps or 23.976 fps films were simply sped up to 25 fps. This shortens the running time by about 4%, which is not enough for most audiences to notice. However, the audio pitch also rises slightly, which can bother more sensitive viewers, so the pitch is sometimes corrected.
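The numbers behind the 25 fps speed-up are easy to check. This Python sketch (the `pal_speedup` helper and the 120-minute running time are illustrative assumptions) computes the playback speed factor, the shortened running time, and the resulting pitch shift in semitones when no pitch correction is applied.

```python
import math

def pal_speedup(source_fps: float, target_fps: float = 25.0,
                runtime_min: float = 120.0) -> tuple[float, float, float]:
    """Effect of playing source_fps material at target_fps without retiming frames."""
    speed = target_fps / source_fps        # playback speed factor
    new_runtime = runtime_min / speed      # same frames, shown faster
    semitones = 12 * math.log2(speed)      # pitch rises with speed unless corrected
    return speed, new_runtime, semitones

speed, runtime, semitones = pal_speedup(24)
print(f"speed x{speed:.4f}, 120 min -> {runtime:.1f} min, pitch +{semitones:.2f} semitones")
# speed x1.0417, 120 min -> 115.2 min, pitch +0.71 semitones
```

A rise of roughly 0.7 of a semitone is small, but it is audible to some listeners, which is why pitch correction is sometimes applied.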

Thanks to the advent of digital broadcasting, the NTSC standards in the United States have been replaced by the ATSC standards. The ATSC system supports various frame rates, including those mentioned earlier as well as higher rates such as 59.94 fps and 60 fps. As a result, there is no longer a technical need for awkward frame rates like 23.976 fps, but it will likely take a long time for them to disappear completely.

Although 24 fps has long been the industry-standard film frame rate, some filmmakers are experimenting with higher frame rates. For example, The Hobbit films were shot at 48 fps, Avatar: The Way of Water used 48 fps for parts of the film, and a few films have been shot at 60 fps or even 120 fps. Higher frame rates have clear advantages in areas like sports broadcasting, but there is still skepticism about their use in films, with some arguing that they look too “real” and lack a cinematic look and feel.

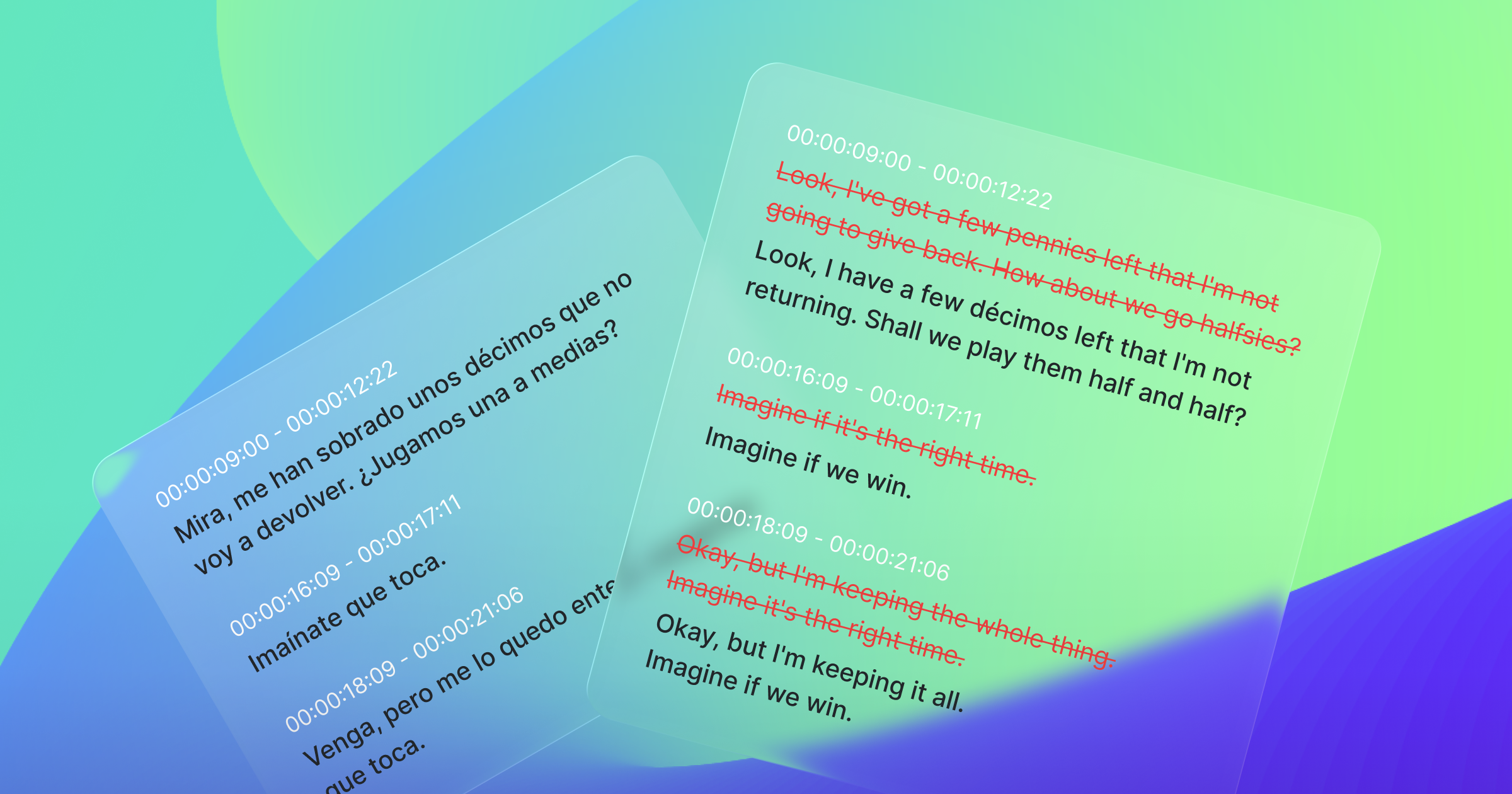

Subtitles and Frame Rates

Subtitles and frame rates may not seem directly related, since subtitles are timed to the audio. However, subtitles are displayed over the picture, so the picture must also be taken into consideration. For instance, if someone starts speaking just after a scene change, it looks better to show the subtitle from the frame of the scene change rather than from the start of the speech. Similarly, it is preferable to hide the subtitle before the next scene change where possible. Some argue that when a scene changes while a subtitle is on screen, viewers may mistakenly believe the subtitle has changed as well and start re-reading the same text. To help you address these issues, the subtitle editor in XL8 MediaCAT displays a thumbnail of every frame and highlights scene changes on the audio waveform.


Streaming services and broadcasting networks often have their own detailed guidelines on subtitle timing. For example, they may require subtitles to appear exactly at the scene change if the speech starts within 0.5 seconds after it. Likewise, any subtitle that disappears within 0.5 seconds before a scene change may need to be extended so that it disappears two frames before the scene change. MediaCAT Sync has a “Timing Style” option that automatically adjusts timecodes according to common rules like these. Of course, these rules are not absolute, and they may even conflict with each other in some cases. It is important for media localization experts to exercise their judgment to create subtitles that provide the most comfortable viewing experience. Our goal is to help you make those decisions efficiently and apply them painlessly.
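Rules like these are mechanical enough to sketch in code. The following Python example is a hypothetical illustration of the two example rules above, not how MediaCAT Sync's “Timing Style” option is implemented. It assumes cue-in and cue-out are frame numbers (cue-out being the first frame on which the subtitle is no longer shown), that scene changes are given as frame numbers, and that the 0.5-second window is rounded to the nearest whole frame. The names `Cue` and `apply_scene_change_rules` are our own.

```python
from dataclasses import dataclass

@dataclass
class Cue:
    cue_in: int   # first frame on which the subtitle is shown
    cue_out: int  # first frame on which it is no longer shown

def apply_scene_change_rules(cue: Cue, scene_changes: list[int], fps: float,
                             window_s: float = 0.5, gap_frames: int = 2) -> Cue:
    """Hypothetical sketch of two example subtitle timing rules around scene changes."""
    window = round(window_s * fps)  # the 0.5 s window expressed in whole frames
    cue_in, cue_out = cue.cue_in, cue.cue_out

    # Rule 1: speech starts within the window after a cut -> show the subtitle from the cut.
    starts = [sc for sc in scene_changes if sc < cue.cue_in <= sc + window]
    if starts:
        cue_in = max(starts)  # nearest cut before the original cue-in

    # Rule 2: subtitle ends within the window before a cut -> end it gap_frames before the cut.
    ends = [sc for sc in scene_changes if sc - window <= cue.cue_out < sc]
    if ends:
        cue_out = min(ends) - gap_frames  # nearest cut after the original cue-out

    if cue_out <= cue_in:
        return cue  # the rules would collapse the cue; keep the original for manual review
    return Cue(cue_in, cue_out)

print(apply_scene_change_rules(Cue(105, 180), scene_changes=[100, 190], fps=24))
# Cue(cue_in=100, cue_out=188)
```

As written, a cue that already ends within the last two frames before a cut is pulled back to keep the two-frame gap; whether that is desirable is exactly the kind of judgment call the guidelines leave to the editor.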

Subtitles and Timecodes

Timecodes indicate the specific moments when subtitles appear (“cue-in”) and disappear (“cue-out”). Depending on the subtitle format, timecodes are based either on milliseconds or on frames. Formats commonly used by the general public tend to use milliseconds, while those used in professional environments, where specifying exact frames is important, tend to use frames. For example, SRT and WebVTT use milliseconds, while STL, PAC, and SCC use frames.

Timecodes in milliseconds are typically represented as hh:mm:ss.SSS, where SSS is milliseconds (e.g. 01:10:25.500), while timecodes with frames are usually represented as hh:mm:ss:ff, where ff is a frame number (e.g. 01:10:25:15). At 30 fps, the frames within each second are numbered 0 to 29. Frame 15 falls exactly halfway through the second, at 0.500 seconds, so 01:10:25:15 represents the same moment as 01:10:25.500. This frame-based format is called SMPTE timecode, because it was defined by the Society of Motion Picture and Television Engineers. Many localization service providers receive videos with SMPTE timecodes burned into the frames, known as BITC (burnt-in timecode).
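As a minimal sketch of the example above, the Python functions below (hypothetical names) parse an hh:mm:ss:ff timecode at an integer frame rate into exact seconds and format seconds as hh:mm:ss.SSS. Using `fractions.Fraction` avoids floating-point rounding; non-integer rates such as 29.97 fps need the workarounds discussed later in this article and are not handled here.

```python
from fractions import Fraction

def smpte_to_seconds(tc: str, fps: int) -> Fraction:
    """Convert an hh:mm:ss:ff timecode at an integer frame rate to exact seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return hh * 3600 + mm * 60 + ss + Fraction(ff, fps)

def seconds_to_ms_timecode(seconds: Fraction) -> str:
    """Format seconds as hh:mm:ss.SSS, truncating to whole milliseconds."""
    total_ms = int(seconds * 1000)
    hh, rem = divmod(total_ms, 3_600_000)
    mm, rem = divmod(rem, 60_000)
    ss, ms = divmod(rem, 1000)
    return f"{hh:02d}:{mm:02d}:{ss:02d}.{ms:03d}"

print(seconds_to_ms_timecode(smpte_to_seconds("01:10:25:15", 30)))  # 01:10:25.500
```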

Converting between seconds and frames is straightforward. Multiply the seconds by the fps value and round down to obtain the frame number; conversely, divide the frame number by the fps value to obtain the corresponding seconds. Note that the same millisecond timecode maps to different frame timecodes depending on the frame rate. Some subtitle formats do not store the video’s frame rate, so it is crucial that you specify the correct frame rate when importing or exporting subtitles in these formats.
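Here is the same conversion as a small Python sketch (the function names are hypothetical). It keeps the frame rate as an exact fraction so that rounding down is not thrown off by floating-point error, and it shows the same millisecond time, 1.5 seconds, landing on different frame numbers at different frame rates.

```python
import math
from fractions import Fraction

def ms_to_frame(ms: int, fps: Fraction) -> int:
    """Frame on screen at a given time: multiply seconds by fps and round down."""
    return math.floor(Fraction(ms, 1000) * fps)

def frame_to_ms(frame: int, fps: Fraction) -> Fraction:
    """Start time of a frame in milliseconds: divide the frame number by fps."""
    return Fraction(frame) / fps * 1000

for fps in (Fraction(24), Fraction(25), Fraction(30000, 1001)):
    print(fps, ms_to_frame(1500, fps))
# 24 36
# 25 37
# 30000/1001 44
```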

You may be curious how you can use SMPTE timecodes with non-integer frame rates like 23.976 fps or 29.97 fps. Two workarounds exist: non-drop frame timecodes and drop frame timecodes.

Non-drop frame timecodes (“NDF”) simply treat 29.97 fps media as 30 fps, assuming 30 frames correspond to a single second. Since 60 * 60 * 30 = 108,000, frame 108,000 is labeled 01:00:00:00. However, since the media actually runs at 29.97 fps, the frame at the true one-hour mark is frame 107,892 (60 * 60 * 29.97). As a result, non-drop frame timecodes fall behind the actual time by 3.6 seconds every hour.
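The 3.6-second figure can be checked with exact arithmetic. This Python sketch (the helper name `ndf_drift_seconds` is hypothetical) counts 30 frames per timecode second, as non-drop frame timecode does, and measures how long those frames actually take to play at 30,000/1,001 fps.

```python
import math
from fractions import Fraction

FPS_NTSC = Fraction(30000, 1001)  # exact "29.97" frame rate

def ndf_drift_seconds(hours: int) -> Fraction:
    """Seconds by which non-drop frame timecode lags real time after `hours` of timecode."""
    frames = hours * 3600 * 30          # NDF labels 30 frames per timecode second
    real_seconds = frames / FPS_NTSC    # how long those frames actually take to play
    return real_seconds - hours * 3600

print(float(ndf_drift_seconds(1)))      # 3.6
print(math.floor(3600 * FPS_NTSC))      # 107892: frame on screen at the true one-hour mark
```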

Drop frame timecodes (“DF”), as the name suggests, drop some frame numbers to correct this discrepancy; no actual video frames are discarded, only timecode labels are skipped. Drop frame timecodes are typically used only for 29.97 fps: frame numbers 00 and 01 are skipped at the start of every minute. For instance, the frame after 00:00:59;29 is 00:01:00;02. However, no frame numbers are skipped in every tenth minute (minutes 00, 10, 20, and so on), so labels like 00:00:00;00 and 00:10:00;01 still exist. Think of how leap years fix the discrepancy between the calendar year and Earth’s orbital period, and drop frame timecodes become easy to understand. Drop frame timecodes reflect the real running time more accurately, but they can be confusing and inconsistent. They are also not perfectly precise, because they compensate for 29.97 fps rather than 30,000/1,001 fps, leaving a discrepancy of about 0.1 frames every hour. To distinguish drop frame from non-drop frame timecodes, drop frame timecodes use a semicolon (“;”) instead of a colon (“:”) between seconds and frames.
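The counting behind drop frame timecodes can be verified in a few lines of Python (a sketch with a hypothetical helper name). It counts the skipped labels in one hour, the number of frames one hour of drop frame timecode actually spans, and the leftover discrepancy against a true hour at 30,000/1,001 fps.

```python
from fractions import Fraction

def dropped_labels_per_hour() -> int:
    """Labels ;00 and ;01 are skipped each minute, except in minutes divisible by 10."""
    return sum(2 for minute in range(60) if minute % 10 != 0)

frames_per_df_hour = 3600 * 30 - dropped_labels_per_hour()  # frames in one hour of DF timecode
real_frames_per_hour = 3600 * Fraction(30000, 1001)          # frames in one real hour

print(dropped_labels_per_hour())                         # 108
print(frames_per_df_hour)                                # 107892
print(float(real_frames_per_hour - frames_per_df_hour))  # about 0.108 frames per hour
```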

The following table showcases three different frames represented in three types of timecodes. Notice how the disparity increases as time progresses.

Table [Comparison of timecodes in 29.97 fps]

To convert between different types of timecodes, it is recommended to first convert them to frame numbers and then to the desired type. Converting between frame numbers and milliseconds or non-drop frame timecodes is relatively simple, but drop frame timecodes are more challenging. To convert a frame number to a drop frame timecode, calculate how many frame numbers have been dropped up to that frame, add that count to the frame number, and then format the result as an ordinary 30 fps timecode. If you would like a detailed explanation of the process, refer to the following link.
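The approach described above can be sketched in Python for 29.97 fps drop frame timecode, assuming zero-based frame numbers; the function and constant names are our own. Frame numbers are grouped into ten-minute blocks of 17,982 frames (one full minute of 1,800 frames followed by nine minutes of 1,798), the skipped labels are counted and added, and the result is formatted as an ordinary 30 fps timecode. The reverse conversion subtracts two labels for every minute that is not a multiple of ten.

```python
FPS_NOMINAL = 30
DROP = 2                                          # labels ;00 and ;01 skipped per minute
FRAMES_PER_MIN = FPS_NOMINAL * 60 - DROP          # 1,798 frames in a "dropping" minute
FRAMES_PER_10_MIN = FPS_NOMINAL * 600 - DROP * 9  # 17,982 frames per ten-minute block

def frame_to_df(frame: int) -> str:
    """Convert a zero-based frame number to a 29.97 fps drop frame timecode."""
    blocks, rem = divmod(frame, FRAMES_PER_10_MIN)
    # Labels skipped so far: 18 per full ten-minute block, plus 2 for each
    # non-multiple-of-ten minute already begun inside the current block.
    skipped = DROP * 9 * blocks + DROP * max(0, (rem - DROP) // FRAMES_PER_MIN)
    label = frame + skipped  # position on a plain 30 fps label grid
    ff = label % FPS_NOMINAL
    ss = label // FPS_NOMINAL % 60
    mm = label // (FPS_NOMINAL * 60) % 60
    hh = label // (FPS_NOMINAL * 3600)
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"

def df_to_frame(tc: str) -> int:
    """Convert an hh:mm:ss;ff drop frame timecode back to a zero-based frame number."""
    hh, mm, rest = tc.split(":")
    ss, ff = rest.split(";")
    hh, mm, ss, ff = int(hh), int(mm), int(ss), int(ff)
    if ss == 0 and ff < DROP and mm % 10 != 0:
        raise ValueError(f"{tc} is a dropped label and never occurs")
    total_minutes = 60 * hh + mm
    label = (hh * 3600 + mm * 60 + ss) * FPS_NOMINAL + ff
    return label - DROP * (total_minutes - total_minutes // 10)

print(frame_to_df(1799), frame_to_df(1800))  # 00:00:59;29 00:01:00;02
print(df_to_frame("01:00:00;00"))            # 107892
```

Round-tripping a frame number through both functions should return the original number, which makes a handy sanity check when testing a converter.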

Regardless of the type of timecode you use, it is crucial to stay consistent and to use the correct frame rate. When importing any data, make sure timecodes are converted correctly. MediaCAT, for example, always works in actual playing time. If you download subtitles in a format that uses one type of frame-based timecode, such as drop frame, and then import them into software that expects another, such as non-drop frame, they may look fine at the beginning of the video but will drift further out of sync as time progresses.

Hopefully this article has given you some context on the various frame rates and how they affect the media localization process. MediaCAT is an AI product that streamlines media localization workflows. Our goal with MediaCAT is to let you think less about these technical details and focus on what matters: creating frame-perfect subtitles for your media efficiently. Click here to find out more about MediaCAT!

Written by Haisoo Shin, Senior Software Engineer at XL8