Without a doubt, content creation and consumption are being reshaped by artificial intelligence (AI), machine learning, and deep learning. But what’s happening at the intersection of AI and diversity? How can AI improve diversity, equity, inclusion, and accessibility when it’s clear that an AI system trained on biased data will itself be biased? We have quite a challenge on our hands when it comes to cultural representation and to embodying a full range of perspectives, experiences, and sensitivities.

Diversity is pivotal for organizations and industries to evolve and thrive. Making meaningful progress on diversity, equity, inclusion, and belonging (DEIB) is an important organizational shift that many companies are grappling with. Diversity and inclusion go well beyond gender equity; they also encompass age, origin, culture, education, physical and psychological ability, sexual orientation, neurodiversity, religion, and professional and life experience. Substantial research also shows that diversity brings an organization many advantages, including stronger problem-solving, greater creativity, and better business performance. A shift in mindsets, behaviors, and practices can therefore help everyone reach their full potential.

How Diversity and AI Impact the Media Industry

Promoting diversity and inclusion in the media and entertainment industry is crucial for fostering creativity and for representing diverse voices, stories, and talent. Authentic storytelling contributes to a more inclusive landscape, and the resulting content affects audiences in both positive and negative ways. As more companies deploy AI systems across their operations, it’s critical to understand how human biases and a lack of diversity can make their way into AI models and, from there, spread across the entire media chain to the consumer audience.

AI’s Value in Battling Bias

Humans and AI systems alike span a broad spectrum, and bias will likely never be completely eliminated, since it is part of how humans think. However, AI can add value by revealing the dynamics of interactions between people. It can surface some of the silent forces that determine why certain people are more or less likely to be promoted. Through data mining, it can also measure inclusion in ways that go beyond our perceptions. AI can help us decode the data and probe algorithms for preconceptions, revealing human biases that might otherwise go unnoticed. Ultimately, it can help us identify and reduce the impact of human biases and overcome them instead of perpetuating them.

The Double-Edged Sword

Simply put, biased input equals biased output. AI models learn from the data they are trained on, and a chosen training dataset can encode biased human decisions or historical social inequities. An AI model also cannot account for data it has never seen. As a result, AI bias often stems from the selection of the data itself, which can lead to discriminatory results. Here are a couple of examples of these distorted results: Amazon stopped using an AI hiring algorithm after finding that it favored applicants whose resumes used words such as ‘captured’ and ‘executed’, which appeared more commonly in men’s resumes. Facial analysis technologies have shown higher error rates for minority groups because those groups were poorly represented in the data used to train the models. There are many more examples of AI producing discriminatory results when diversity, equity, and inclusion aren’t taken into consideration.

Tackling Diversity in AI

If the engineers and teams who develop and build AI systems lack diversity, the systems they build are likely to reinforce their builders’ biases. This could propagate bias on a large scale into the resulting technologies, harming entire groups of people. It’s in our hands to increase diversity among AI professionals so that the output of AI models represents the broader global society. This starts with education, well before college and university, and with mentorship, offering opportunities at every stage to shape the future of AI. Guidance and data audits should also be driven by the humans who design, train, and refine AI systems. Technical expertise is essential, but so are diverse human perspectives, which bring unique viewpoints on data, its collection, and privacy.

AI tech companies, researchers, and developers have a responsibility to continuously and regularly examine their data for a lack of diversity and for other bias red flags. This step, supported by bias detection techniques, can surface bias and discrimination, and it is essential for the future of fair AI. Greater transparency about current and future datasets will help developers learn from past mistakes and hold organizations accountable for the AI models they release. Recognizing the significance of diversity and its impact on the accuracy of AI solutions, and applying algorithmic fairness techniques, will help prevent AI from reinforcing existing biases and perpetuating stereotypes.
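A first bias detection check can be as simple as comparing outcomes across groups. The Python sketch below is a minimal, illustrative example, not XL8’s method: it assumes a model’s yes/no decisions are each labeled with a group attribute, computes each group’s selection rate, and reports the ratio of the lowest rate to the highest (a demographic parity, or disparate impact, check). The data, group names, and function names are hypothetical, and the 0.8 threshold borrows the “four-fifths” rule of thumb from US employment selection guidelines purely for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive outcomes for each group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model outputs: (group label, was the candidate shortlisted?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")

# Illustrative threshold only (four-fifths rule of thumb), not a legal or definitive test.
if ratio < 0.8:
    print("Possible bias red flag: review the training data and the model.")
```

With this toy data, group_a is shortlisted 75% of the time and group_b 25% of the time, giving a ratio of about 0.33 and triggering the flag. A check like this only shows that outcomes differ; explaining why, and deciding what to do about it, still requires the human review and data audits this article calls for.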

Promoting Diversity to Eliminate Barriers

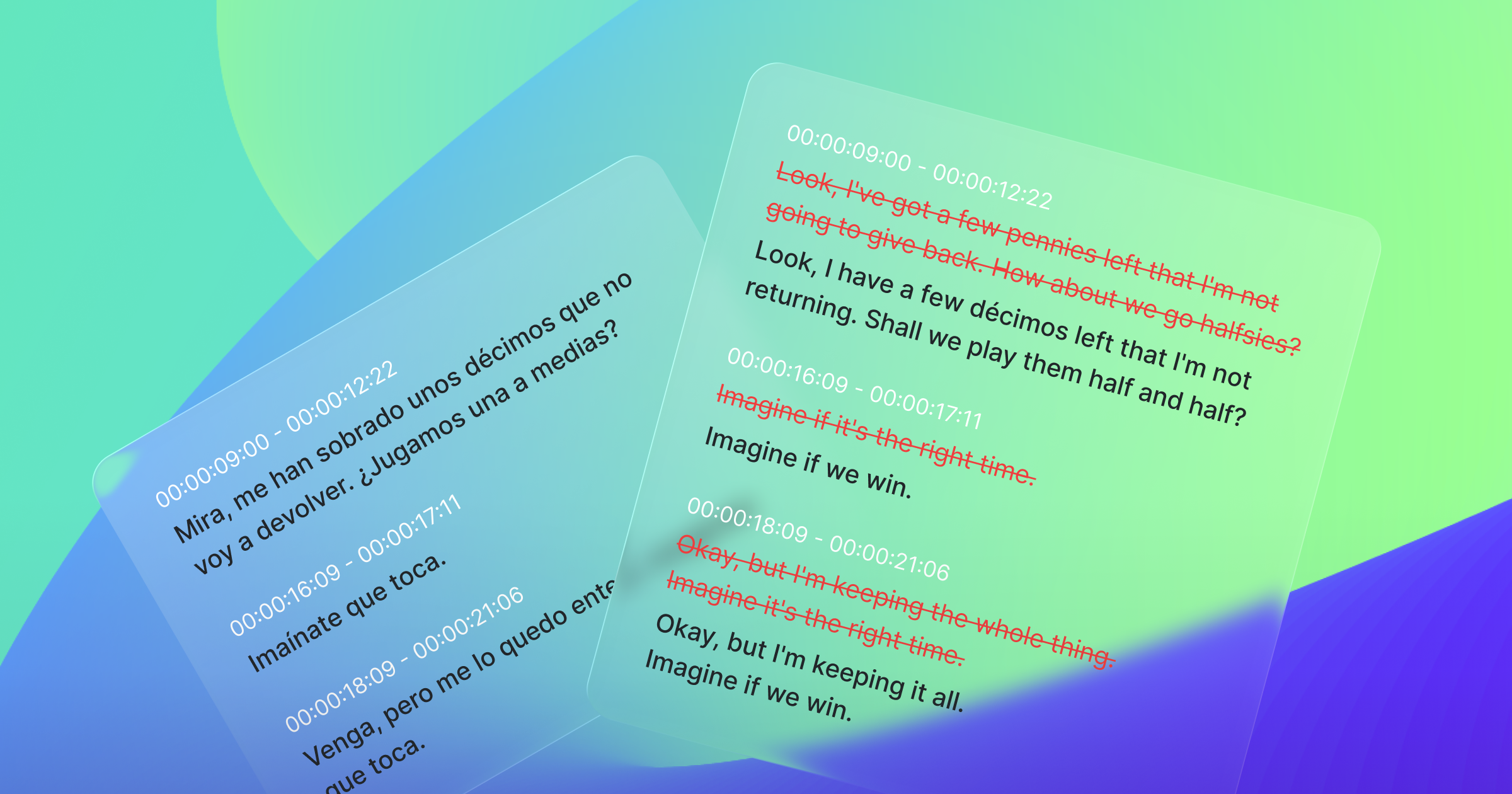

Our mission at XL8 is to help the world communicate by eliminating barriers of language, region, or circumstance through our AI technologies. We’re focused on elevating our thinking as we design our AI models, and we carefully refine data to remove biases and enhance diversity.

We’ve been working on multiple diversity projects with the Korea Center for Gendered Innovation for Science and Technology Research, the Women in Science, Engineering, and Technology group, and the Leeum Art Museum’s events focused on multicultural family experiences. These organizations are using MediaCAT, XL8’s AI-powered media localization tool, to localize video content and presentations through dubbing, transcriptions, and subtitles. Our EventCAT live streaming service, which combines AI and machine learning with speech-to-text for multiple languages, is also helping to break down diversity and inclusion barriers for these organizations and for those who attend their events.

While technological advances in AI are emerging as critical to addressing DEIB challenges and helping organizations meet their diversity objectives, even people with good intentions have innate biases that shape the design of algorithms. At the OTTX Diversity Conference, which I recently attended, many of my conversations reached a similar conclusion: we need more people with different training, perspectives, and backgrounds coming into the AI and media industries. Our challenge is to confront and dismantle these biases so that emerging technology can be more inclusive and diverse.